
When using only one device per thread or one device per process, the general usage of the API remains unchanged from NCCL 1.x to 2.x. The Group API is not required in this case. The following changes still apply:

- Counts: counts provided as arguments are now of type `size_t`.
- In-place usage for AllGather and ReduceScatter: for more information, see In-Place Operations in the NCCL Developer Guide.
- AllGather argument order: the AllGather function had its arguments reordered. The prototype changed from `ncclResult_t ncclAllGather(const void* sendbuff, int count, ncclDataType_t datatype, void* recvbuff, ncclComm_t comm, cudaStream_t stream)` to `ncclResult_t ncclAllGather(const void* sendbuff, void* recvbuff, size_t sendcount, ncclDataType_t datatype, ncclComm_t comm, cudaStream_t stream)`. The `recvbuff` argument has been moved after the `sendbuff` argument to be consistent with all the other operations.
- Datatypes: new datatypes have been added in NCCL 2.x. The ones present in NCCL 1.x did not change and are still usable.
- Error codes: error codes have been merged into the `ncclInvalidArgument` category and have been simplified.

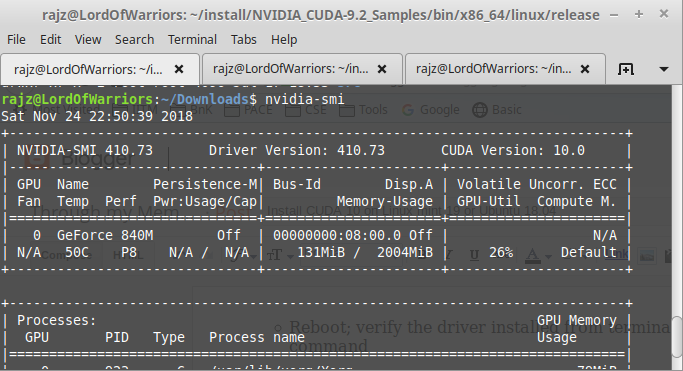

# Install CUDA 9 on Ubuntu 18.04: Install

Install the `libnccl2` package with APT. Additionally, if you need to compile applications with NCCL, you can install the `libnccl-dev` package as well.

# Install CUDA 9 on Ubuntu 18.04: Update

In the installation commands, replace the architecture placeholder with your CPU architecture (for example x86_64 or ppc64le) and the distribution placeholder with your Ubuntu version (for example ubuntu1604 or ubuntu1804).

Collective communication algorithms employ many processors working in concert to aggregate data. NCCL is not a full-blown parallel programming framework; rather, it is a library focused on accelerating collective communication primitives.

Tight synchronization between communicating processors is a key aspect of collective communication. CUDA®-based collectives would traditionally be realized through a combination of CUDA memory copy operations and CUDA kernels. NCCL, on the other hand, implements each collective in a single kernel handling both communication and computation operations. This allows for fast synchronization and minimizes the resources needed to reach peak bandwidth.

NCCL conveniently removes the need for developers to optimize their applications for specific machines. It provides fast collectives over multiple GPUs both within and across nodes, supports a variety of interconnect technologies, and automatically patterns its communication strategy to match the system's interconnect topology.

Next to performance, ease of programming was the primary consideration in the design of NCCL. NCCL uses a simple C API, which can be easily accessed from a variety of programming languages. NCCL closely follows the popular collectives API defined by MPI (Message Passing Interface), so anyone familiar with MPI will find the NCCL API very natural to use. In a minor departure from MPI, NCCL collectives take a “stream” argument, which provides direct integration with the CUDA programming model. NCCL is compatible with virtually any multi-GPU parallelization model, for example:

- multi-threaded, for example, using one thread per GPU;
- multi-process, for example, MPI combined with multi-threaded operation on GPUs.

NCCL has found great application in deep learning frameworks, where the AllReduce collective is heavily used for neural network training. Scaling of neural network training is possible with the multi-GPU and multi-node communication that NCCL provides.
